Research

Dr. Mao’s research focuses on advanced computing systems, including cloud computing, data-intensive platforms, quantum computing, and quantum-based applications.

We develop algorithms to improve the performance of existing systems and propose novel system architectures to address practical issues in industry. Specifically, Dr. Mao investigates the following research problems.

- Quantum systems and applications: we develop algorithms that use quantum bits (qubits) to improve classical applications, such as deep neural networks (e.g., with TensorFlow Quantum and Qiskit).

- Cloud systems and applications: we build cluster management algorithms for virtualized computing platforms, such as Docker and Kubernetes, to schedule resources efficiently and improve performance.

Quantum systems and applications

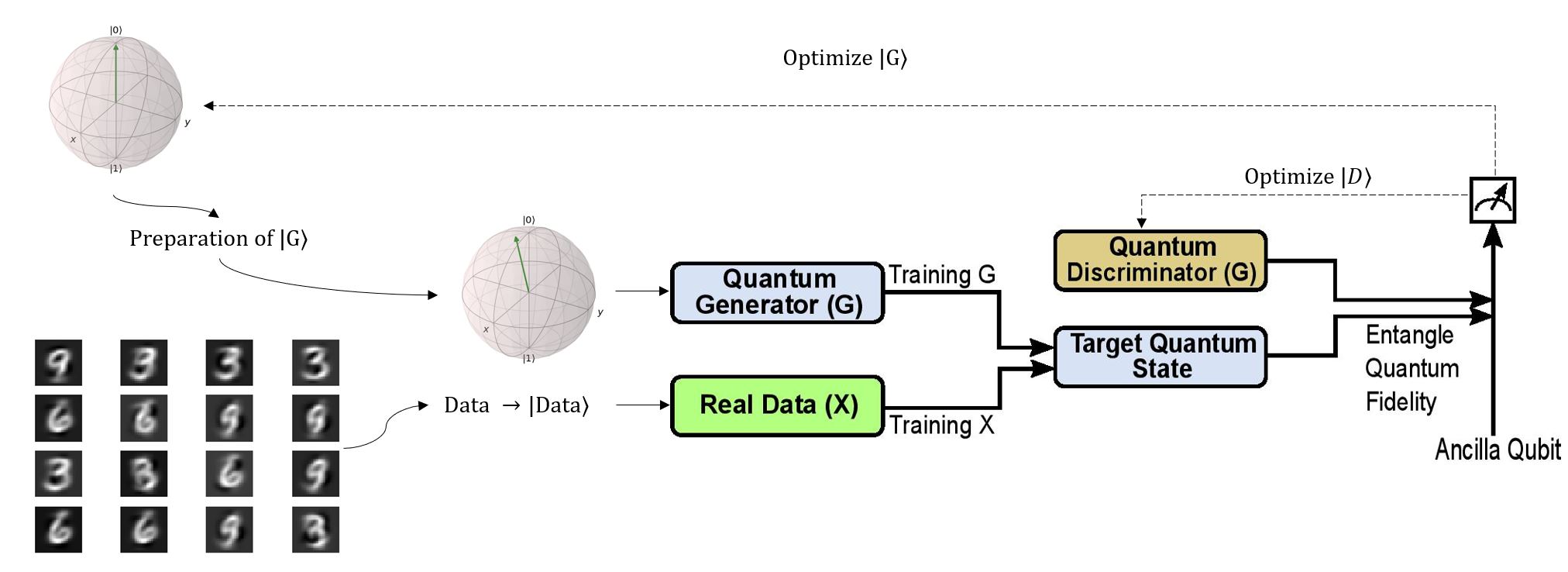

- We developed QuGAN, a quantum Generative Adversarial Network (GAN) implemented through quantum states, to improve important and successful deep learning applications (e.g., Selfies to Emojis, 3D Object Generation, and Face Aging). To exploit the potential quantum speedup, we designed and implemented the QuGAN architecture, which converges stably when trained and tested on real datasets. Compared to classical GANs and other quantum-based GANs in the literature, QuGAN achieved significantly better training efficiency, with up to a 98% reduction in parameter count, and improved the measured similarity between the generated and original distributions by up to 125%.

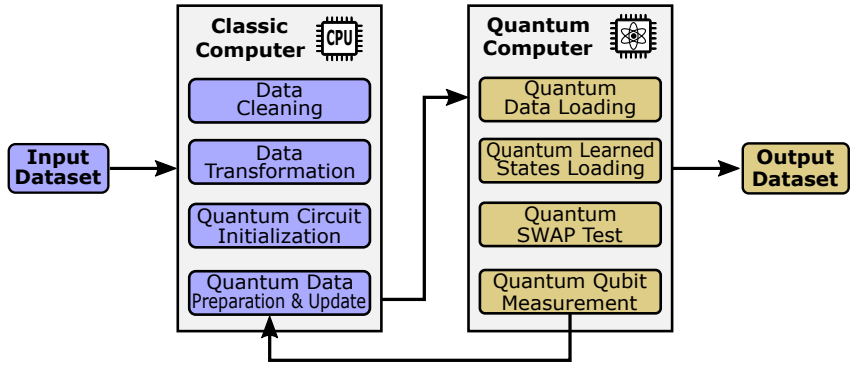

- From a practical systems point of view, we proposed QuClassi, a hybrid quantum-classical deep neural network architecture for classification problems (e.g., face recognition). With a limited number of qubits, the system reduces the size of classical learning models by 95%. To the best of our knowledge, QuClassi is the first practical solution that tackles image classification problems in the quantum setting. Compared to similarly parameterized classical neural networks, QuClassi learned significantly faster (up to 53%) and achieved up to 215% higher accuracy in our experiments. In addition to experiments on local simulators, we evaluated the proposed system on real quantum computers through the IBM Q Experience.